
The world of computing began almost a century ago. Everything starts specifically in 1939 although, as always happens, the ideas behind computing come from much earlier: from the desire to digitize the functions of devices and, with that, to carry out operations more simply. This is how what is known as the “computer generations” came about.

This concept spans practically a century of study, invention and development of all kinds of computing concepts (and, with them, machines): first analog, then hybrid and finally digital.

It covers a total of six stages into which the history of computing is divided to date, each clearly differentiated by one or more elements that defined that generation. We will review all of them to find out what the history of the computer generations has been.

Origin and history of the computer generation

We will begin by pointing out that when we speak of generations of computers, we are not referring only to what we now understand as desktop or laptop computers. We will go much further back, to before they appeared.

First generation (1940-1958)

It runs from 1940 to 1958, always counting the moments of launches or major events and not the earlier periods of study, which were often deliberately kept hidden so that the competition did not know what was being worked on.

The milestone that makes us speak of a first generation of computers is the step from performing calculations manually or semi-manually to doing them digitally and automatically.

In addition, we have other characteristic aspects such as:

- The immense size of these computing machines, several square meters (or even cubic meters, since they were considerably tall too).

- Their construction with vacuum tubes (valves), which replaced moving mechanical parts.

- The use of so-called “machine language”.

- These machines had a precise purpose, being used essentially by academic institutions and military centers.

Von Neumann, Mauchly and Aiken, among others, left us jewels such as the Z1, the first combined machine; the Colossus, created to break encrypted communications during World War II; the ENIAC, which included the CPU as we know it; and the Mark I. These were the main computing machines of these years.

Second generation (1958-1964)

This generation, a very short one, runs from 1958 to 1964. What defines it is the use of transistors in computers, which ended up replacing vacuum tubes, which took up about 200 times more space.

- As you can imagine, this meant a large reduction in the size of the machines. They also consumed less power and produced less heat.

- All this means that we can now speak of the birth of the minicomputer.

- This equipment began to be used in more sectors, especially in banking, accounting and warehouse logistics in general.

- Microprogramming was developed in 1959.

- More complex languages, called “high level” languages, also began to be used.

- The commercial launch of COBOL was especially famous.

Unforgettable personalities of this stage include the multi-award-winning Amdahl, who was at the helm of the design of the IBM 360 series, a set of machines that ran the same software but had different specifications, allowing users to choose the one best suited to their needs; Bardeen, Brattain and Shockley, who worked to invent the transistor; and M. Wilkes, who developed microprogramming.

As for the computers that stand out in these years, we have the IBM 1401, which is considered the most successful machine in history (selling 12,000 units); the PDP-1, designed to be used by workers and not only by mathematicians and engineers; the IBM Stretch, which was the first supercomputer built entirely with transistors; and the already mentioned IBM 360 series.

Third generation (1965-1971)

What marks the third generation of computers, spanning the years from 1965 to 1971, is the use of integrated circuits on silicon wafers to perform information processing, bringing together the earlier transistors and other elements.

- This achieved more capable processing in less space and with fewer independent elements, which helped reduce failures.

- It is very notable that computers began to be used “on a regular basis” for business purposes. We can say that during these years the devices became more “accessible”, being known all over the world and having features that made them useful for a larger audience.

- Reliability improved and the machines became more flexible.

- Teleprocessing and multiprogramming became common.

- In addition, people began to talk about the computer on a personal level.

The IBM 360 series, already important in the previous stage, would be the first to include integrated circuits. The PDP-8 also stands out, a minicomputer for general use that sold half a million units and worked with three programming languages. The PDP-11, profitable for a whole decade, was the first to have a single, asynchronous bus to which all its parts were connected.

Notable personalities include Kilby, inventor and developer of the integrated circuit; Noyce, who solved its initial problems; Ted Hoff, who invented the microprocessor, of special importance years later; and Kemeny and Kurtz, who developed the universal BASIC language.

Fourth generation (1971-1980)

This generation runs from 1971 to 1980, in a context in which the big step is the replacement of conventional processors by microprocessors.

- This meant a new miniaturization of many of the computer's parts.

- There was also a multiplication in power, capacity and flexibility.

- So much so that personal computers appeared and went on sale, which happened in 1977.

Other aspects of the decade worth highlighting are the appearance of the graphical interface, the coining of the term “microcomputer”, the interconnection of machines in networks to share resources, and the growth in the capabilities of supercomputers.

Making this possible were experts such as Ted Hoff who, as already mentioned, was the brain behind the concept of the microprocessor; Kemeny and Kurtz, who remained points of reference thanks to the growing use and increasingly successful versions of their programming language; the firm Intel, which launched the first microprocessor in history; Bill Gates, the face of Altair BASIC, the famous and revolutionary BASIC interpreter; and Wozniak, a child prodigy capable of inventing all kinds of devices and improving them until they practically became something else.

During the 1970s, unforgettable machines appeared. The first is the Cray-1, a supercomputer built with integrated circuits. The PDP-11, already revolutionary earlier, continued to be talked about: it was so good that, instead of designing another machine to offer the sector's latest advances, work was done to add them to it, keeping it on the market with great success.

The Altair 8800 was the best-selling microprocessor-based computer, in this case built around an 8-bit Intel 8080. The Apple II family revolutionized the market by launching as a useful device for the home user, including the benefits that came with the spreadsheet; interestingly, the brand started out very affordable.

Fifth generation (1981-2000)

This is indeed a strange generation of computers, because it is described in two very different ways. On the one hand, it refers to the moment when Japan undertook a project, ultimately a total failure, to improve computing through artificial intelligence.

The project started in 1982 and lasted more than a decade, until continuing was considered unfeasible given its multi-million cost in resources and its results, which were negative, to say the least.

However, we must give this project a big round of applause because, as you may be thinking right now, the Japanese set their sights on exactly the area on which technology seems set to be based over the coming years.

On the other hand, aside from this project, what defines this long period or, rather, what makes us consider it a distinct one, is the development of the laptop.

Of course, over so many years and at such an advanced stage, much more was achieved in computing and information technology in general.

Below are the milestones achieved in these wonderful years:

- The speed and the amount of memory available in computers increase exponentially.

- Languages can be translated instantly.

- A number of ports begin to be introduced in computers and, with them, the possibilities multiply and machines can be customized further. The most popular and most important peripherals are, without a doubt, storage devices.

- Computers can once again be designed to be even smaller.

- Software multiplies, appearing in all kinds and at all levels of complexity.

- All this brings back the fashion for cloning famous equipment.

- Multimedia content stands out above the rest.

The inventions of the moment were the Osborne 1 portable microcomputer, the first to be presented at a fair; the Epson HX-20, another much more functional laptop, with a dual processor and microcassette storage; the floppy disk, a flexible, thin and removable disk on which to store information and carry it around comfortably; and Windows 95, without a doubt the best-known operating system on the planet.

Sixth generation (2000 – present)

We are now in what is known as the sixth generation of computers, a stage with no single defining characteristic but with a great deal of everything, in both quantity and quality.

What we do find as the turning point that opens the stage is wireless connectivity, which allows us to connect to networks and other devices without the need for cables.

We will highlight points such as:

- The development of other smart devices, first phones and then many others such as televisions, watches and even household appliances.

- A huge range of devices for all tastes and needs.

- The Internet as an everyday and, in fact, necessary element of daily life all over the world.

- Making cloud services available to all users.

- The popularization of streaming content.

- Online commerce also develops considerably until it becomes, in fact, a standard.

- A dizzying leap in terms of artificial intelligence.

- Use of vector and parallel architectures for computers.

- The storage capacity of both internal and external memory grows markedly and becomes important.

The inventions and events of this millennium include Wi-Fi, fiber optics, larger-capacity storage drives, SSDs, smartphones, mobile operating systems, much smaller laptops and those already known as “desktop laptops” for their incredible features, identical to those of PCs.

What will the future of computing hold for us?

The era of digital transformation has begun and the first cobblestones are being laid on a fairly long path, but one that leads us squarely to a future where computing takes first place and its market is the most powerful in the world.

You are surely wondering how these changes will affect each of us. We are going to analyze each proposal and the implications it will have for our lives.

Augmented Analytics (Big Data)

Supercomputers work with large amounts of data, and it is not always possible to explore every existing possibility. As a result, data analysts do not always test all the hypotheses.

Many options are thought to be lost this way, and information of great interest to us is being missed. That is why augmented analytics has arisen, aiming to find a new way of understanding the data. In addition, because a machine takes care of the work, it eliminates personal bias and can review the most hidden patterns.

It was forecast that by 2020 more than 40% of data analysis tasks would be automated, taking human management of data to another level.

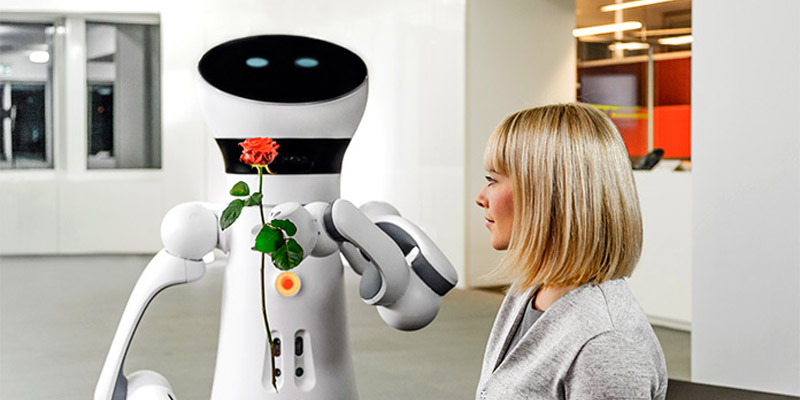

Artificial intelligence

The new solutions created for companies and industry will be built on artificial intelligence. Data analysis, testing, code generation and the program development process will be automated. As a result, it will be less necessary to be a programming specialist to work in this field.

Solutions based on artificial intelligence will become faster and faster, without errors or trial runs. In fact, time will be a much more precious commodity than it is now and, paradoxically, one that is easier to obtain.

Autonomy of things

Artificial intelligence will play a very important role in the future, not only at the company level but also for individuals. Robots will be programmed to perform simple tasks, imitating the actions performed by humans. This technology will become increasingly sophisticated, so it will require applications, IoT objects and services that can help automate human processes.

Changes in the Facebook network

The network will rely less on ads to finance itself and will start promoting cryptocurrency. To keep its subscribers' attention, it will continue to develop its own content, buy rights to broadcast content and deploy blockchain-based projects; the power of the social platform, and its ability to turn wherever suits it, is a little scary.

Blockchain

Blockchain technology allows companies to trace a transaction, so they can also work with untrusted parties without requiring the intervention of a bank.

Blockchain reduces transaction management costs and times, which improves cash flow. It is estimated that blockchain will move approximately 3.1 trillion dollars in a decade.
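The traceability idea can be illustrated with a minimal sketch in Python. It is not how any particular blockchain platform is implemented: there is no network, consensus or signing here, and the names `block_hash`, `build_chain` and `is_valid` are hypothetical helpers made up for this example. It only shows the core trick, assuming each block stores the hash of the previous one, so that editing an old transaction becomes detectable.

```python
import hashlib
import json


def block_hash(index, transaction, prev_hash):
    """Return the SHA-256 digest of a block's contents."""
    payload = json.dumps(
        {"index": index, "transaction": transaction, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(transactions):
    """Link each transaction to the hash of the block before it."""
    chain = []
    prev_hash = "0" * 64  # placeholder "previous hash" for the first block
    for index, transaction in enumerate(transactions):
        current = block_hash(index, transaction, prev_hash)
        chain.append(
            {
                "index": index,
                "transaction": transaction,
                "prev_hash": prev_hash,
                "hash": current,
            }
        )
        prev_hash = current
    return chain


def is_valid(chain):
    """Recompute every hash; an edited block no longer matches its stored hash."""
    prev_hash = "0" * 64
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["transaction"], block["prev_hash"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


# Made-up transactions, purely for illustration.
ledger = build_chain(["A pays B 10", "B pays C 4", "C pays A 1"])
print(is_valid(ledger))  # True

ledger[1]["transaction"] = "B pays C 400"  # tamper with an old record
print(is_valid(ledger))  # False
```

Because every party can rerun the same check, nobody has to trust whoever holds the ledger, which is the sense in which an intermediary such as a bank becomes less necessary.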

Emergence of digital twins

A digital twin is a digital representation of an object that exists or will exist in real life, so it can be used to represent certain designs at another scale. The idea goes back to computer-aided design.

Digital twins are much more robust and are used, above all, to answer questions such as “what would happen if…?” In this way they can help avoid the costly errors that occur today in real life. The twins will be the official testers.
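As a rough illustration of a “what would happen if…?” question, the following Python sketch models a hypothetical water tank. The class `TankTwin`, its fields and every number in it are invented for this example; a real digital twin would be fed with sensor data and a far richer physical model.

```python
from dataclasses import dataclass


@dataclass
class TankTwin:
    """Hypothetical digital twin of a water tank (all values are made up)."""

    capacity_l: float
    level_l: float

    def simulate(self, inflow_l_per_min: float, outflow_l_per_min: float, minutes: int) -> bool:
        """Return True if the tank would overflow within the given time."""
        level = self.level_l  # work on a copy so the twin's own state is untouched
        for _ in range(minutes):
            level = max(0.0, level + inflow_l_per_min - outflow_l_per_min)
            if level > self.capacity_l:
                return True
        return False


twin = TankTwin(capacity_l=1000.0, level_l=400.0)

# What would happen if the inflow stayed at 30 l/min for half an hour?
print(twin.simulate(inflow_l_per_min=30.0, outflow_l_per_min=20.0, minutes=30))  # False

# And for an hour and a half?
print(twin.simulate(inflow_l_per_min=30.0, outflow_l_per_min=20.0, minutes=90))  # True
```

The point is that the overflow is discovered in the model rather than in the real tank, which is where the saving on costly errors comes from.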

Emergence of immersive technologies

Immersive technologies are going to change the many ways a user can interact with the world. It will no longer be just a matter of using augmented reality (AR), virtual reality (VR) and mixed reality (MR); 70% of companies will offer these types of technologies for use by consumers.

Thanks to them, conversational platforms will change, including chatbots, personal assistants and sensory channels able to detect emotions from facial expressions and tone of voice.

Increased number of smart spaces

A smart space is a bounded physical or digital zone that allows humans and technology to interact positively, in a connected and coordinated way.

The concept of smart cities will emerge, creating smart frameworks for urban ecosystems. Once that concept is established, ways of improving these spaces through social and community collaboration will be sought.

Quantum computing

It will be used to improve results related to digital calculations as well as artificial intelligence. Such specialized services require, of course, an equally specialized design, one that computes with quanta.

Hardware